Light Detection and Ranging (LiDAR) is now commonly used in autonomous vehicles. Students from AGH University of Science and Technology have created a car detection system using LiDAR sensors and an FPGA. The system classifies cars based on the data collected by the LiDAR sensors.

LiDAR sensors use a special optical system to emit a beam of laser light at a specific wavelength and in a specific direction. The beam reflected from an obstacle is picked up by detectors located in the same device. The distance to the obstacle is calculated from the time between emitting the light and receiving its reflection.
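The time-of-flight principle above reduces to one formula: the pulse covers the distance twice, so d = c·t/2. The sketch below assumes the pulse travels at the vacuum speed of light (the refractive index of air changes the result by only about 0.03%). The function name `tof_distance` is illustrative and does not come from the project.

```python
# Speed of light in vacuum, m/s (exact SI value); assumed for propagation in air.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance(round_trip_s: float) -> float:
    """Return the distance in metres to the obstacle that reflected a pulse.

    The pulse travels to the obstacle and back, so the one-way distance
    is half of the total path c * t.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Example: an echo arriving 200 ns after emission.
print(f"{tof_distance(200e-9):.2f} m")  # prints "29.98 m"
```

Each nanosecond of round-trip time corresponds to roughly 15 cm of range, which is why LiDAR receivers need very precise timing.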

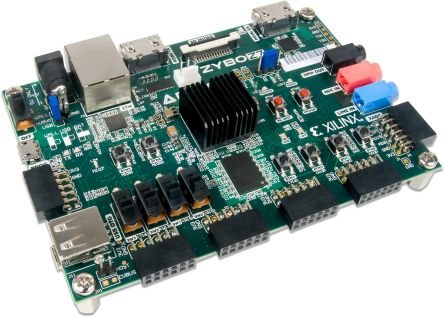

This project demonstrates that image and data processing can be performed on heterogeneous platforms (FPGA + ARM) as efficiently as on conventional processors. The hardware platform used is the Digilent Zybo Z7 (Zynq-7000), a ready-to-use embedded software and digital circuit development board built around the Xilinx Zynq™-7000 family.

The Zynq-7000 tightly integrates a dual-core ARM Cortex-A9 processor with Xilinx 7-series Field Programmable Gate Array (FPGA) logic. A rich set of multimedia and connectivity peripherals makes the Zybo Z7 a formidable single-board computer. A MIPI CSI-2 compatible Pcam connector, HDMI input, HDMI output, and high DDR3L bandwidth establish the Zybo Z7 as an affordable yet capable solution for high-end embedded vision applications.

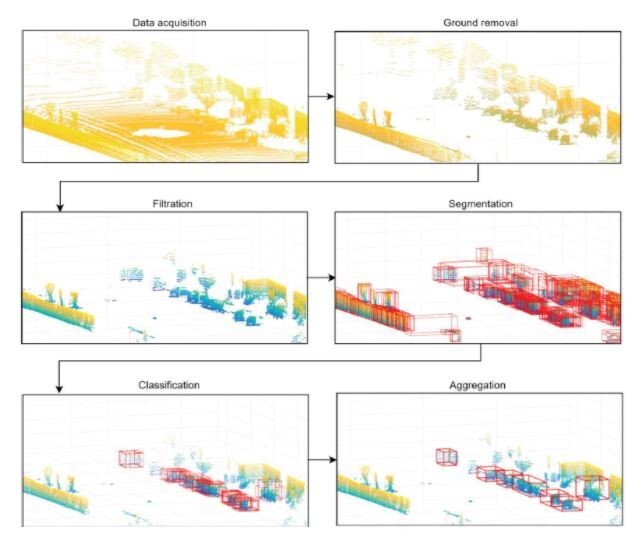

One of the most important elements in this system is data acquisition from the LiDAR sensor and the camera. The LiDAR data is handled as a point cloud, and its processing can be divided into several stages. The first stage is preprocessing, which includes ground removal, filtration, and background removal. The next stage is segmentation, which divides the remaining point cloud into segments that contain potential objects. Finally, features are extracted from each segment and classification is performed.
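The stages above can be sketched in a few lines of Python. This is a minimal illustration, not the students' implementation: it assumes a flat ground plane at a fixed height (a simple height threshold stands in for ground removal), uses naive Euclidean clustering for segmentation, and extracts bounding-box extents as features. Filtration, background removal, and the classifier itself are left out, and all function names and thresholds are hypothetical.

```python
import math
from collections import deque

# A point is (x, y, z) in metres, with z measured upwards from the road.


def remove_ground(points, ground_z=0.2):
    """Drop points at or below an assumed flat ground plane at height ground_z."""
    return [p for p in points if p[2] > ground_z]


def euclidean_clusters(points, radius=0.5, min_size=3):
    """Group points connected by chains of neighbours no farther apart than `radius`.

    Clusters with fewer than `min_size` points are discarded as noise.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Linear neighbour search per point: fine for a sketch, slow for large clouds.
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:
            clusters.append([points[k] for k in members])
    return clusters


def segment_features(segment):
    """Axis-aligned bounding-box extents and point count of one segment."""
    xs, ys, zs = zip(*segment)
    return {
        "length": max(xs) - min(xs),
        "width": max(ys) - min(ys),
        "height": max(zs) - min(zs),
        "points": len(segment),
    }


cloud = [
    (0.0, 0.0, 0.0), (1.0, 1.0, 0.05),                  # ground returns
    (5.0, 0.0, 0.5), (5.2, 0.1, 0.7), (5.4, 0.0, 0.9),  # one object
    (20.0, 5.0, 1.0),                                   # isolated noise point
]
segments = euclidean_clusters(remove_ground(cloud))
for seg in segments:
    print(segment_features(seg))
```

Pairwise neighbour search makes this clustering quadratic in the number of points. That keeps the sketch short, but it would not suit a real-time FPGA design as written.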

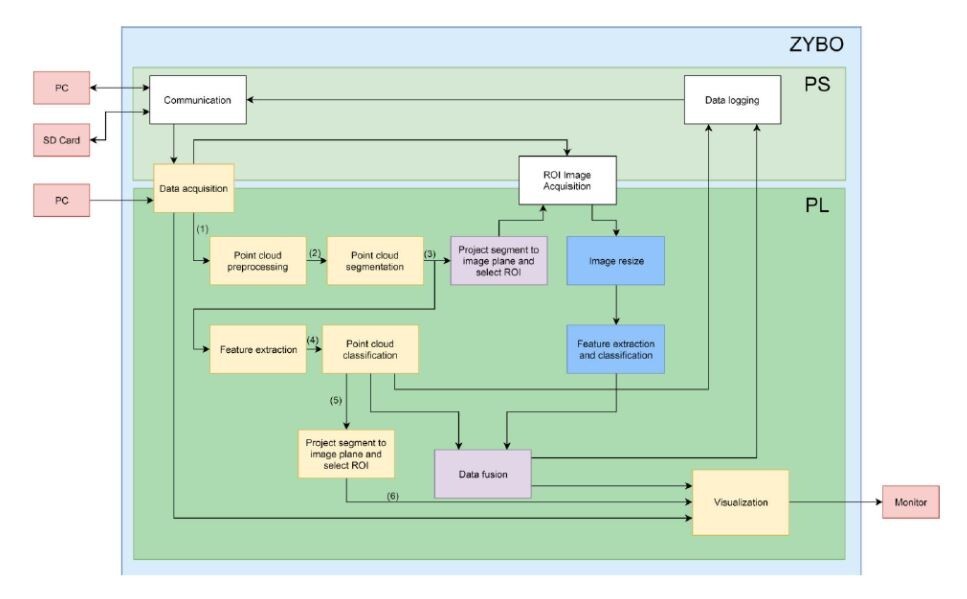

In the vision part of the system, the first step is to project the segments onto the image plane and select the corresponding regions of interest (ROIs). Each ROI is extracted and scaled. The final step is feature extraction and classification, using a Histogram of Oriented Gradients (HOG) and a Support Vector Machine (SVM). The last part of the algorithm is data fusion: the classification scores from both systems are fused to obtain the final object detection probability. This approach was tested in the MATLAB environment and in Vivado simulation.
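Projecting a segment onto the image plane and fusing the two scores might look like the sketch below. It assumes an ideal pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and LiDAR points already transformed into the camera frame, so the LiDAR-to-camera extrinsic calibration is omitted. The article does not say how the scores are fused; the weighted average in `fuse_scores` is a hypothetical stand-in, and the HOG/SVM classification of the ROI is not shown.

```python
import math


def project_point(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (z pointing forward) to pixels."""
    x, y, z = p_cam
    if z <= 0:
        return None  # point is behind the camera
    return (fx * x / z + cx, fy * y / z + cy)


def segment_roi(segment_cam, fx, fy, cx, cy, width, height):
    """Pixel rectangle (u0, v0, u1, v1) enclosing a projected segment, clipped to the image."""
    pixels = [q for q in (project_point(p, fx, fy, cx, cy) for p in segment_cam) if q is not None]
    if not pixels:
        return None
    us, vs = zip(*pixels)
    u0, v0 = max(0, math.floor(min(us))), max(0, math.floor(min(vs)))
    u1, v1 = min(width - 1, math.ceil(max(us))), min(height - 1, math.ceil(max(vs)))
    if u0 > u1 or v0 > v1:
        return None  # segment lies entirely outside the image
    return (u0, v0, u1, v1)


def fuse_scores(p_lidar, p_camera, w_lidar=0.5):
    """Hypothetical fusion rule: weighted average of the two car probabilities."""
    return w_lidar * p_lidar + (1.0 - w_lidar) * p_camera


# Assumed intrinsics for a 640x480 camera and a segment 10 m ahead.
segment = [(-1.0, -0.5, 10.0), (1.0, 0.5, 10.0)]
roi = segment_roi(segment, fx=500.0, fy=500.0, cx=320.0, cy=240.0, width=640, height=480)
print(roi)  # prints (270, 215, 370, 265)
print(fuse_scores(p_lidar=0.8, p_camera=0.6))
```

The ROI returned here is what would then be cropped, scaled to a fixed size, and passed to the HOG feature extractor and SVM.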

The figure below represents the entire project design and the feature implementation. The numbers near the lines represent signals from the LiDAR data cells. The signals pass through each step of the processing flow and are analyzed. This process indicates whether a cell was removed during point cloud preprocessing, and each cell is flagged as valid or invalid accordingly. A group of signals is transformed into a segment and then classified. Finally, the segment is transformed into a bounding box, marked as an object, validated by the system, and shown on the monitor.
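The valid-flag idea can be modelled in software as a stream of cells that is never shortened: each preprocessing stage only clears a flag, and segmentation then groups runs of consecutive valid cells. This is a hypothetical Python model of that data flow, not the project's HDL; the `Cell` fields, stage names, and thresholds are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    """One LiDAR data cell travelling through the processing stream (hypothetical fields)."""
    distance_m: float
    height_m: float
    valid: bool = True


def ground_stage(cells, ground_height_m=0.2):
    """Clear the valid flag of cells assumed to be ground returns; never drops a cell."""
    for cell in cells:
        if cell.height_m <= ground_height_m:
            cell.valid = False
        yield cell


def range_stage(cells, max_range_m=50.0):
    """Clear the valid flag of cells beyond an assumed maximum useful range."""
    for cell in cells:
        if cell.distance_m > max_range_m:
            cell.valid = False
        yield cell


def segments_from_stream(cells):
    """Group each run of consecutive valid cells into one segment."""
    segment = []
    for cell in cells:
        if cell.valid:
            segment.append(cell)
        elif segment:
            yield segment
            segment = []
    if segment:
        yield segment


scan = [Cell(12.0, 0.1), Cell(12.1, 0.8), Cell(12.2, 1.2), Cell(80.0, 1.0), Cell(30.0, 0.05)]
for seg in segments_from_stream(range_stage(ground_stage(scan))):
    print([c.distance_m for c in seg])  # prints [12.1, 12.2]
```

Marking cells instead of deleting them means every stage receives data at a fixed rate, which is one plausible reason a valid-flag scheme suits an FPGA pipeline.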

Today, a car detection system can also be built on heterogeneous platforms, as this project shows. Using a LiDAR sensor and a camera connected to the Zybo Z7 (Zynq-7000), developers can start building projects that integrate with Advanced Driver Assistance Systems (ADAS) at low cost. The Zybo Z7 is available from Farnell Denmark.